Keyword research is arguably the most important step in the SEO process. Google’s recent algorithm updates have made it increasingly expensive, complicated, and risky to attempt to “punch above your weight” by manipulating your way to the top of the search engine results, and keyword research is the perfect antidote: it lets you target keywords where your site is already competitive, or at least where the competition is weak enough that ranking won’t take every trick in the SEO book.

Despite technological advances, even the best SEO software is still just a shadow on the cave wall compared to the complexity of Google rankings. That’s why it’s incredibly important when checking keyword difficulty to understand the accuracy of the keyword difficulty tools you are using.

One of the fundamental principles underlying CanIRank is that less SEO is better SEO. And one of our goals was to create the single best measure of keyword difficulty so that users can tell at a glance whether or not their site is competitive for a given keyword. The result is the CanIRank Ranking Probability score, the first measure of keyword difficulty that assesses keyword difficulty relative to your website.

CanIRank’s Ranking Probability is more actionable than traditional Keyword Difficulty scores.

Other keyword difficulty tools will tell you that “home loan” and “SEO” both have approximately the same keyword difficulty (85-87%). CanIRank will tell you that QuickenLoans.com scores a 100% Ranking Probability for “home loan” but only 77% for “SEO”, while SEOBook.com has a 95% Ranking Probability for “SEO”, but only a 72% chance of competing for “home loan”.

As a site owner, getting keyword difficulty scores relative to your website is both more accurate and more actionable.

Not only are accurate Ranking Probability predictions important for helping to guide you in making the right decisions, but they’re also a reflection of our understanding of search engine ranking factors. When CanIRank makes accurate predictions, it suggests our ranking models do a good job capturing the impact of various ranking factors. This understanding of ranking factors is what enables us to then draw meaningful comparisons between websites, highlight areas in need of improvement, and recommend Actions that will have the greatest impact on your site’s ranking.

So we’ve invested a lot of time, money, and research into creating what we feel are the most sophisticated ranking models in the SEO universe.

How Accurate is CanIRank’s Keyword Difficulty Tool?

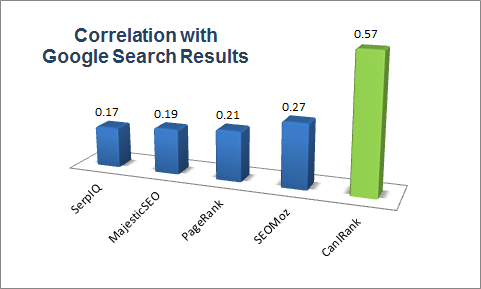

The short answer is that while no predictions are perfect (just ask the weatherman), CanIRank is over twice as accurate as other keyword difficulty tools, and can be used to explain a majority of the ranking differences between websites.

More specifically, as a measure of how competitive a given web page will be in the Google search results, CanIRank’s Ranking Probability score is:

- 109% more accurate than SEOMoz Page Authority

- 170% more accurate than Google PageRank

- 202% more accurate than MajesticSEO CitationFlow

- 167% more accurate than SerpIQ Competition Index

Before I dive into the analysis, I’d like to emphasize that all of the above are excellent and useful SEO tools, and this post is in no way an attempt to disparage or put down competing offerings. While CanIRank comes away looking pretty good in this study, it’s far from an exhaustive analysis of keyword difficulty tools, and all of the tools have their own strengths and weaknesses which I hope you’ll explore to determine what best meets your needs.

Comparing Top Keyword Difficulty Tools

I based this experiment on the notion that an ideal metric for a page’s competitiveness would have a perfect rank order correlation to Google search results. That is, for any given keyword the #1 result in Google should always have a higher competitiveness score than the #2 result, which should have a higher score than the #3 result, and so on. Perfectly ranking 40 search results in the correct order would require that a metric encapsulates many of the same factors that Google considers, and also weights them similarly. In other words, it’s quite a rigorous standard for a competitive analysis tool, especially as it’s not as important that we can correctly order a #3 result before a #4 result as it is that we can tell when a URL is likely to rank #4 and when it is likely to be #40 (or not ranking at all).

Can SEO metrics order results correctly?

To measure how closely popular SEO metrics come to this ideal, I first pulled the top 40 Google results for 25 keywords randomly selected from a batch of 2 million keywords used in PPC advertisements. For each of the resulting 1,000 URLs, I collected the CanIRank Ranking Probability score, SEOMoz Page Authority, Google PageRank, MajesticSEO CitationFlow, and SerpIQ Competition Index. I then threw out all the URLs where SEOMoz didn’t have any data, leaving just under 700 results.
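As a rough illustration of that clean-up step (not the actual code used for this study), the sketch below drops every row that has no Page Authority value and groups what is left by keyword. The `Result` class, its field names, and the `usable_results_by_keyword` helper are all hypothetical, and the sample rows are made up.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    keyword: str
    position: int                      # 1-based position in the Google results
    url: str
    page_authority: Optional[float]    # None when the tool returned no data


def usable_results_by_keyword(results):
    """Drop rows with no Page Authority data, then group the rest by keyword."""
    grouped = defaultdict(list)
    for row in results:
        if row.page_authority is None:
            continue  # no data for this URL, so it is excluded from the comparison
        grouped[row.keyword].append(row)
    for rows in grouped.values():
        rows.sort(key=lambda row: row.position)  # keep Google's order within a keyword
    return dict(grouped)


# Made-up rows for illustration only; not data from the study.
sample = [
    Result("bankruptcy nj", 3, "https://example.com/c", 31.0),
    Result("bankruptcy nj", 1, "https://example.com/a", 48.0),
    Result("bankruptcy nj", 2, "https://example.com/b", None),
]

grouped = usable_results_by_keyword(sample)
print([row.position for row in grouped["bankruptcy nj"]])  # [1, 3]
```

Note that after filtering, the remaining positions can have gaps (here 1 and 3). That is harmless for a rank-based comparison, since only the relative order matters.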

For each keyword, I then ranked the URLs according to each metric and calculated the correlation between the rankings predicted by the metric, and the actual ranking in the Google results. Statistics geeks will recognize this as Spearman’s Rank Order correlation coefficient. A coefficient of 1 suggests the rank order of the two variables is perfectly correlated, while a coefficient of 0 means that there is no correlation.
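For readers who want to try this on their own data, here is a minimal sketch of the calculation in plain Python. It is an illustration under stated assumptions, not the code behind this study: the function names are hypothetical, the example scores are made up, and ties are handled by giving tied values their average rank (one common convention). Scores are negated before ranking so that the highest score gets rank 1, which means a positive coefficient indicates that higher scores go with better Google positions.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks (smallest value = rank 1); ties share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (var_x * var_y) ** 0.5


def spearman(scores, google_positions):
    """Spearman's coefficient: Pearson correlation computed on the ranks."""
    predicted = average_ranks([-s for s in scores])  # highest score -> rank 1
    actual = average_ranks(google_positions)         # position 1 -> rank 1
    return pearson(predicted, actual)


def mean_spearman(per_keyword):
    """Average the per-keyword coefficients; maps keyword -> (scores, positions)."""
    return mean(spearman(s, p) for s, p in per_keyword.values())


# Made-up scores for illustration only; not data from the study.
example = {
    "keyword a": ([90, 70, 80, 40, 50], [1, 2, 3, 4, 5]),  # two adjacent pairs swapped
    "keyword b": ([30, 20, 10], [1, 2, 3]),                 # perfectly ordered
}

print(round(spearman(*example["keyword a"]), 2))  # 0.8
print(round(mean_spearman(example), 2))           # 0.9
```

If SciPy is available, `scipy.stats.spearmanr` computes the same coefficient and is the more practical choice; the hand-rolled version is shown only to make each step visible.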

Here’s an example of what the keyword table looks like for one of the keywords, “bankruptcy NJ”:

You may recognize this methodology as similar to past studies conducted by SEOMoz to determine the correlation between various web page attributes and high Google rankings, in which they’ve uncovered correlations ranging from 0 for keywords in an H1 tag to 0.03 for content length to 0.25 for # of linking root domains (the highest correlation). You may be relieved to discover that, in this data sample at least, all of the SEO metrics outperformed nearly all of the raw page attributes. Woo-hoo! Perhaps there’s something to this SEO stuff after all.

Best Keyword Difficulty Tool Results

In order from highest mean correlation to lowest, let’s examine the suitability of each individual metric in more detail. Please keep in mind that this is an apples-to-oranges comparison as each of these metrics is designed to capture something slightly different, and as far as I know only CanIRank is even attempting to capture all of the factors that go into determining a URL’s competitiveness.

CanIRank – Ranking Probability – 0.57

CanIRank’s Ranking Probability scores had the highest correlation to Google search results, with a mean rank order correlation coefficient of 0.57. Of course, this is to be expected as Ranking Probability is really the only measure intended to capture the complete picture of search engine ranking factors. Although 0.57 represents a fairly strong relationship between the variables (by comparison the correlation between IQ and academic grades is around 0.5), I think we can still do much better, and there are a number of improvements in the works which I think will push this number even higher.

Moz – Page Authority – 0.27

Moz (previously known as SEOMoz) describes their Page Authority metric as “Moz’s calculated metric for how well a given webpage is likely to rank in Google.com’s search results”. There are some pretty smart folks up in Seattle working for Moz, and it shows in the excellent performance of their Page Authority metric, which had the second-highest correlation with Google search results. This is especially impressive, considering that (as far as I know, at least) Page Authority is not intended to capture any relevancy factors. In their own studies, Moz has calculated Page Authority correlations as high as 0.38; I’m not sure why the result is so much lower in my study.

Google – PageRank – 0.21

Google’s PageRank, measured on a 0 to 10 scale and intended to capture the relative importance of a web page, was once the only game in town as far as metrics go. Unfortunately, it’s become much less reliable in recent years, and most SEOs don’t put much weight in it anymore. However, as a predictor of Google rankings, PageRank scored the third-highest correlation, suggesting that it does indeed still seem to play a role. I used RankSmart’s handy bulk PageRank checker to collect the data for this study.

MajesticSEO – CitationFlow – 0.19

CitationFlow is Majestic’s version of Google PageRank, described as “a number predicting how influential a URL might be based on how many sites link to it.” As with PageRank, CitationFlow gives more weight to links from pages that themselves have many inbound links. As a predictor of Google rankings, CitationFlow was about as useful as PageRank, with a mean correlation of 0.19.

SerpIQ – Competition Index – 0.17

SerpIQ’s Competition Index is “designed to give you a quick snapshot of the average difficulty of a Search Engine Rankings Page (SERP). The score is calculated by looking at the main ranking factors for a site, mainly: domain age, page speed, social factors, on page SEO, backlink amount and quality, and PageRank.” Unfortunately, I wasn’t able to get a Competition Index score for URLs beyond the top 10 results for each keyword, so SerpIQ’s correlation of 0.17 was calculated based upon the top 10 results only.

Conclusion

With a rank order correlation coefficient of 0.57, CanIRank’s keyword difficulty accuracy is high enough for us to feel comfortable claiming that Ranking Probability scores can be useful in assessing whether or not a web page is competitive for a given keyword. Of course, no one outside of Google knows what factors are included in their algorithm, and they have access to all kinds of data (such as Chrome, Google Analytics, Android, SERP click-tracking, etc.) that other companies do not have. Our goal is not to replicate their algorithm, but simply to find out whether a ranking model built upon publicly available information retrieval concepts would correlate strongly enough to draw useful conclusions. We think this study demonstrates that this is possible, and with further work, we hope CanIRank will be able to provide even more accurate keyword difficulty and ranking predictions. Utilizing keyword research tools can be a huge asset to your SEO initiatives. Happy ranking!

If I understand it correctly, CanIRank’s keyword difficulty metric would be calculated for a combination of a given URL and a keyword. Is this correct?

What if I still do not have a website or webpage? Can I get the “general” difficulty of the keyword? I guess one could calculate the average of the metric for the top 10 or 20 ranking URLs for the keyword in Google SERP.

Also, can one specify the country for country-specific SERPs?

Sorry if these questions are already answered in other posts.

Thanks

Juan

Hi Juan,

Yes, that’s correct. “Generalized” keyword difficulty is a myth rooted in an oversimplified understanding of Google’s search ranking algorithms: they’re not something linear or points-based like sports team rankings or class rankings. #1 doesn’t always have more points than #2 which has more points than #3 and so on…

So instead we look at the suitability and competitiveness of your specific website for a search query. MUCH more accurate, and it lets us identify specifically which areas are holding you back, so that you can fix them!

If you don’t have a website yet, you can still get a much more accurate assessment of keyword difficulty for your situation by picking any reference URL that is similar to the site you plan to build. More details on this strategy here: http://nopassiveincome.com/canirank-review/

Yes, you can specify which country you’d like to target: http://canirank.freshdesk.com/support/solutions/articles/4000034547-how-do-i-target-the-results-to-my-local-country-i-m-only-interested-in-ranking-in-google-co-uk-ca-

Matt,

I have a question on how a correlation is drawn between CanIRank RP and MOZ PA. Moz PA is not keyword specific, while CanIRank RP is. Why wouldn’t MOZ keyword difficulty be used instead of MOZ PA? Wouldn’t that metric be more relevant in this study? I can see one challenge is that this study is specifically addressing ranking of a URL for a given KW, whereas MOZ PA addresses the URL, and MOZ difficulty addresses the KW. Combining the two somehow would give a more accurate result, I would think. Given that this article is on “Best Keyword Difficulty Metric”, it just seems the MOZ keyword difficulty tool would be more relevant than MOZ PA.

I look forward to your input.

Thank you,

Ralph.

Good question Ralph! If we looked at keyword-level metrics, there’d be no way to assess accuracy. However, with URL-level metrics, we know that a metric is at least directionally correct if a higher score on that metric correlates to a higher ranking in Google search results. Lots of high-scoring URLs means a challenging keyword! This is how keyword difficulty tools work, so in Moz’s case Page Authority is the primary URL-level metric they’re looking at to calculate keyword difficulty (along with Domain Authority). As I wrote in the post, it’s not an apples to apples comparison because CanIRank is the only one that’s even trying to look at relevancy and how your specific URL/website compares, but one of the things we wanted to demonstrate is how advantageous such an approach can be. Hope that helps clarify!
