When Google releases a new algorithm they describe as “one of the biggest leaps forward in the history of search”, SEOs pay attention.

Good SEO consultants and their clients will want to know how these changes will impact SEO strategy, and if there are any actions that can be taken to improve rankings under the new algorithm.

In this article, we’ll provide more background on Google’s adoption of Bidirectional Encoder Representations from Transformers (BERT), show examples of how it changes rankings for specific queries, and provide recommendations for how you can better align your content strategy with Google’s improved language understanding capabilities.

There have already been many articles written about BERT — why one more? First, I’m writing from the perspective of a Stanford-trained machine learning engineer turned SEO. While I’m not an NLP specialist, I’ve previously built neural nets, including one that could predict the ranking of randomized Google results with 60% accuracy. I also keep a folder of “Google Fails”, which provided some interesting before and after examples demonstrating BERT’s impact. At the very least, I can promise this post won’t just be reiterating Google’s BERT launch release and the same examples you’ve seen everywhere else.

TL;DR: Everything SEOs need to know about BERT in 100 words:

- Unlike previous relevancy algorithms that treated texts like a “bag of words”, BERT better understands how relationships between words change meaning and query intent

- Since BERT understands query micro-variations that had previously been ignored, it should benefit sites with a lot of in-depth topical content that were previously outcompeted by higher authority sites with less topical coverage

- Because CanIRank’s on-page optimization recommendations are derived from the current top-ranking results, our software will show you exactly how to optimize your content for post-BERT query intent

What is BERT?

Bidirectional Encoder Representations from Transformers, or BERT, is a new method for pre-training neural network-based language understanding models that better accounts for the fact that a word’s meaning can change depending on the context, a concept known as polysemy.

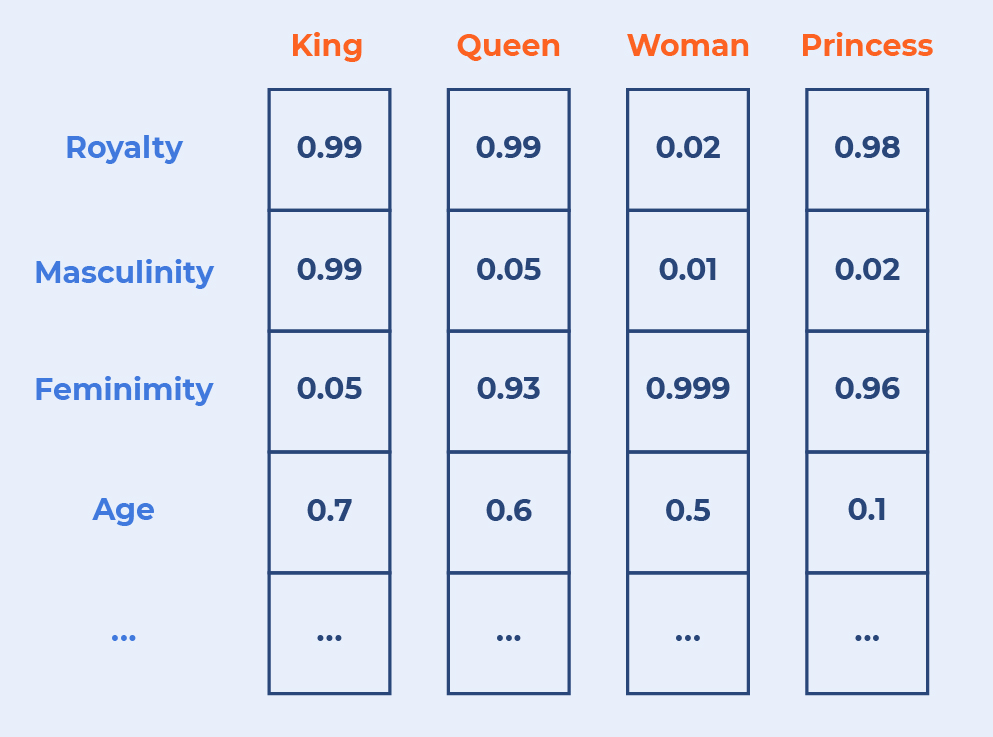

Previous language understanding models, such as word2vec, relied on vector representations of different words called word embeddings. Word embeddings allow computers to “understand” the meaning behind words, as well as their relation to one another.

While powerful, word embeddings generated by these methods had significant limitations because every occurrence of a word was given the same word embedding regardless of context. “Play” has the same word embedding whether the sentence is “my son is starring in his class play”, “did you catch that amazing play in the 7th inning?” or “enough learning about NLP; let’s go outside and play!”.
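To make that limitation concrete, here is a minimal Python sketch of a static embedding lookup. The vectors are made up for illustration (real word2vec embeddings are learned from text and have hundreds of dimensions), and the `embed` helper is hypothetical, not part of any library.

```python
import math

# Made-up 3-dimensional vectors for illustration only; real word2vec
# embeddings are learned from large text corpora.
STATIC_EMBEDDINGS = {
    "play": [0.9, 0.4, 0.1],
    "game": [0.8, 0.5, 0.2],
    "theater": [0.3, 0.9, 0.1],
    "tax": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def embed(word, sentence):
    """Static lookup: the sentence is accepted but never consulted."""
    return STATIC_EMBEDDINGS[word]


theater_play = embed("play", "my son is starring in his class play")
baseball_play = embed("play", "did you catch that amazing play in the 7th inning?")

# The two sentences use different senses of "play", yet the vector is identical.
print(theater_play == baseball_play)

# Related words still score higher than unrelated ones.
play_vs_game = cosine_similarity(STATIC_EMBEDDINGS["play"], STATIC_EMBEDDINGS["game"])
play_vs_tax = cosine_similarity(STATIC_EMBEDDINGS["play"], STATIC_EMBEDDINGS["tax"])
print(play_vs_game > play_vs_tax)
```

The lookup captures how words relate to one another, but it has no way to tell the theater sense of “play” from the baseball sense.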

Other models need to be used to refine meaning based on context. BERT is not the first of those models, but it can do something the others can’t: learn the meaning of a word based on context both before and after the target word (“bidirectional”). BERT’s output includes representations of word meaning similar to word2vec, but adds additional neural net layers that serve to encapsulate meaning due to syntax as well as higher-level aspects of language understanding, such as dependency, negation, agreement, and anaphora.
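Why context on both sides matters can be shown with a toy Python sketch. To be clear, this is not how BERT works internally (BERT uses learned Transformer attention layers); it simply averages a word’s vector with its neighbors, and the two-dimensional vectors and the `contextual_vector` helper are assumptions invented for this illustration.

```python
# Made-up 2-dimensional vectors for illustration only:
# first axis ~ "theater", second axis ~ "sports".
TOY_VECTORS = {
    "play": [0.5, 0.5],  # ambiguous on its own
    "starring": [1.0, 0.0],
    "class": [0.9, 0.1],
    "amazing": [0.5, 0.5],
    "inning": [0.0, 1.0],
}


def contextual_vector(tokens, index, window=4, bidirectional=True):
    """Average the target word's vector with any known neighbors.

    With bidirectional=False, only words before the target are used.
    """
    start = max(0, index - window)
    end = min(len(tokens), index + window + 1) if bidirectional else index + 1
    vectors = [TOY_VECTORS[t] for t in tokens[start:end] if t in TOY_VECTORS]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


theater = "my son is starring in his class play".split()
baseball = "did you catch that amazing play in the 7th inning".split()

theater_play = contextual_vector(theater, theater.index("play"))
baseball_play = contextual_vector(baseball, baseball.index("play"))
left_only = contextual_vector(baseball, baseball.index("play"), bidirectional=False)

print(theater_play)   # leans toward the "theater" axis
print(baseball_play)  # leans toward the "sports" axis
print(left_only)      # still ambiguous: "inning" comes after "play"
```

In the baseball sentence the disambiguating word comes after “play”, so a representation that only looks leftward never sees it.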

So if you want to be the obnoxious know-it-all at an SEO party, time to stop dropping “n-dimensional vector word embeddings” and start crowing about “deep contextualized word representations”.

A Giant Leap for Query Understanding?

In order to understand why BERT is a significant breakthrough for both Google and SEOs, it helps to look back on the evolution of search engine relevancy algorithms.

Early search engines primarily paid attention to the presence of certain keywords or keyword variations. For SEOs, this meant life was easy: do your keyword research, throw any variation with search volume into a title tag and add a few paragraphs of fluff content. And boom! Page 1 rankings. Many content strategies focused on cranking out lots of pages, each targeting keyword micro-variations.

Boring to implement, terrible for users… But incredibly effective.

This approach worked so well that some started to take it too far, as content farms like eHow cranked out tens of thousands of vapid articles, many covering the same topic but targeting a slight keyword variation.

As Google worked to quash content farms and their proliferation of low-quality content, the pendulum swung far in the other direction: Google began to pay more attention to other signals like authority and site quality, and relevancy shifted to broader “meaning-based” semantic relevancy algorithms rather than keyword usage.

Semantic relevancy algorithms like Google Hummingbird work by understanding the relationships between different words and phrases. For the most part, they treat texts as a “bag of words” where changes in meaning due to the position of one word in relation to another are largely ignored.
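A short Python sketch shows what gets lost in a bag-of-words view. It is a deliberately simplified illustration, not a description of how Hummingbird or any Google system is implemented; the `bag_of_words` helper is hypothetical.

```python
from collections import Counter


def bag_of_words(text):
    """Lowercase, split on whitespace, and count tokens; word order is discarded."""
    return Counter(text.lower().split())


query_a = "thank you card from photographer to client"
query_b = "thank you card from client to photographer"

# Who is thanking whom is reversed, but the two bags are identical.
print(bag_of_words(query_a) == bag_of_words(query_b))  # True
```

Any model that only sees these counts cannot distinguish the two queries, even though a human reads them as opposite situations.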

With semantic relevancy algorithms, search results for “SEO tools”, “best SEO tools”, “top SEO tools” and even “SEO tool reviews” look nearly identical because Google assumes these searchers are all trying to accomplish basically the same thing.

Query intent matters as much as specific keywords.

“With the coming of BERT the familiar saying of “Create Great Content With User Intent In Mind” rings much more true! The game has not changed but the system has got much more advanced forcing more SEOs to play the game the way it’s made to be played.”

David Freudenberg, Founder, Seowithdavid

While largely positive, it sometimes felt like Google would ignore parts of long-tail queries, especially if they included common words. As a result, SEOs found themselves competing for the same limited subset of query intents as all their competitors. Great if you’re a high authority site like the NY Times; not so much for the niche specialist.

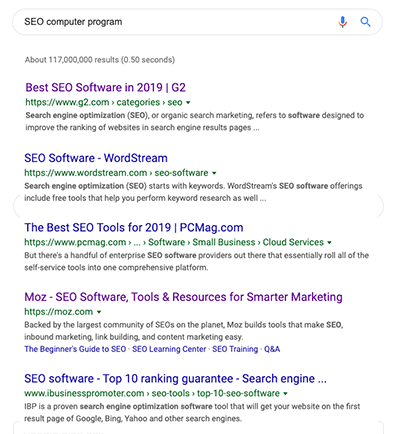

Crappy keyword difficulty tools often spit out “red herring keywords” that seem like they’re going to be easy because no one else is optimized for them. But then you find out that even if you target “SEO computer program”, you’re still competing against all of the sites optimized for “SEO software” whether you want to or not.

BERT is still very much a meaning-based relevancy algorithm. But for longer conversational queries where the relative position of individual words could change the query meaning, it should no longer feel like Google is ignoring a portion of your query.

For SEOs, this certainly doesn’t represent a return to the glory days of long-tail keywords, but it should allow you to successfully capture more long-tail traffic if your content is particularly in-depth or covers unique aspects of a topic.

To understand this better, let’s take a look at some examples of search results before and after BERT.

Examples of How BERT Changes Google’s Query Understanding

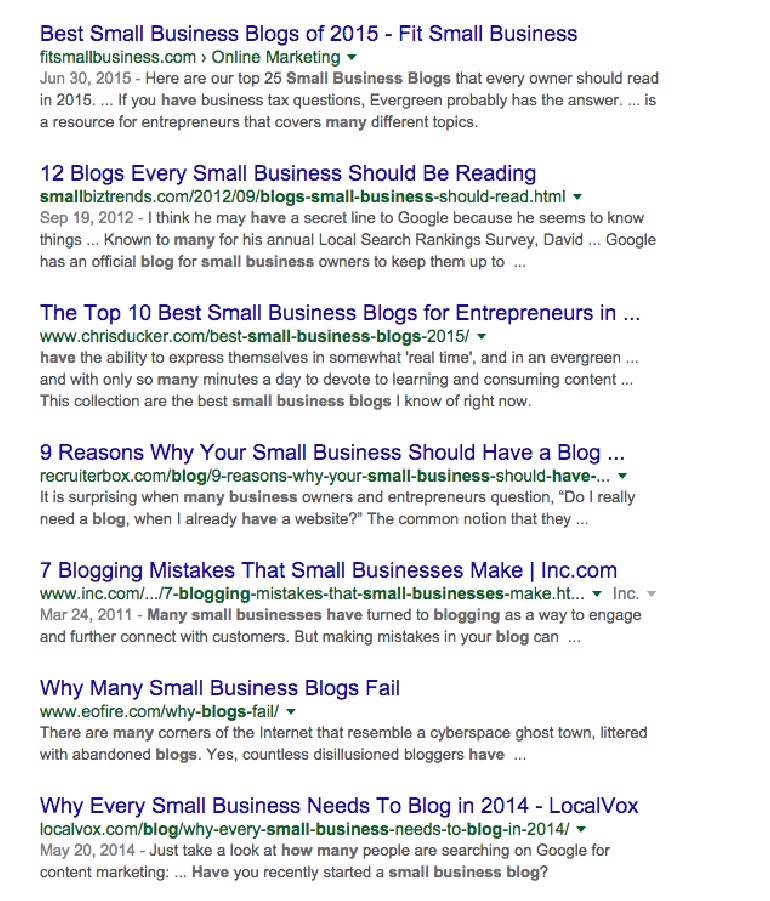

QUERY: “How many small businesses have a blog?”

| Before | After |
|---|---|
| | |

Before BERT, Google got so excited about the concept of “small business blogging” in this query that it completely ignored the “how many” and failed to return any results addressing the question.

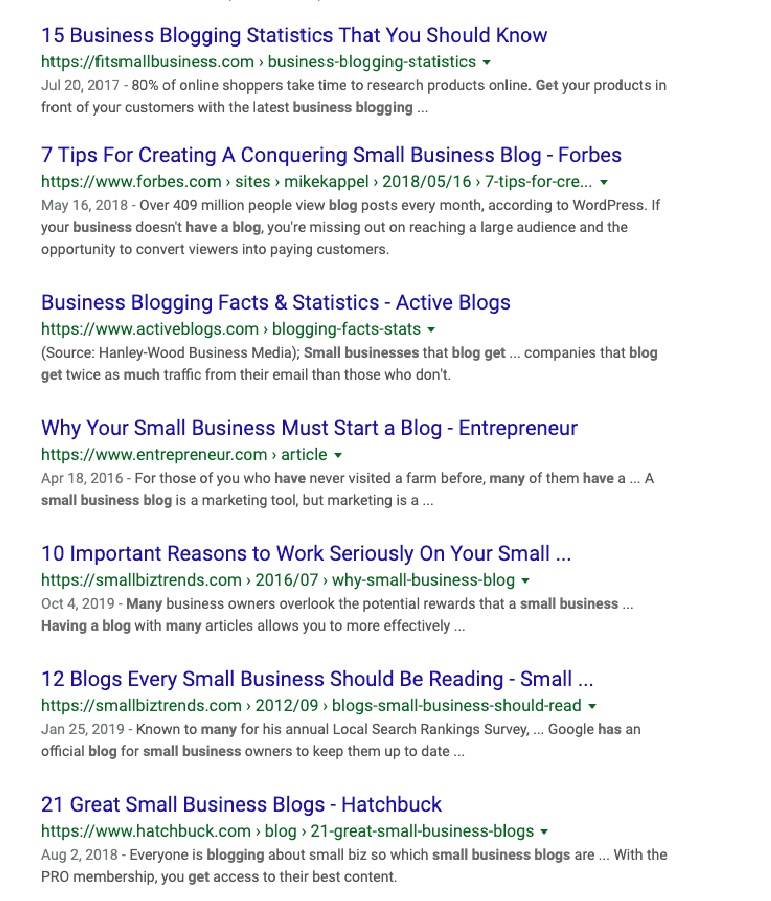

After BERT, Google now understands the query intent is to find statistics about blogging, and they even identified several closely related questions like “How many businesses have a blog?” and “What percentage of small businesses have a website?”

As impressive as that improvement is, none of the results actually answer the question. Moreover, Google still seems a little uncertain about the intent here as a majority of the results give reasons to start a blog or tips on starting a small business blog rather than statistics.

Results #1, #3, and #8 come closest to addressing the true intent. It’s interesting to note that they contain a lot of questions and answers and are very quantitative, which may show BERT’s ability to connect the “how many” to statistics.

Related Terms for this Query?

CanIRank’s on-page optimization recommendations reflect the new “dual intent” nature of this query, and they include Related Terms focused on the true query intent as well as Google’s misunderstood intent focused on blog benefits and tips:

- blog statistics

- business blogging statistics

- how many businesses have a blog

- what percentage of businesses have a website

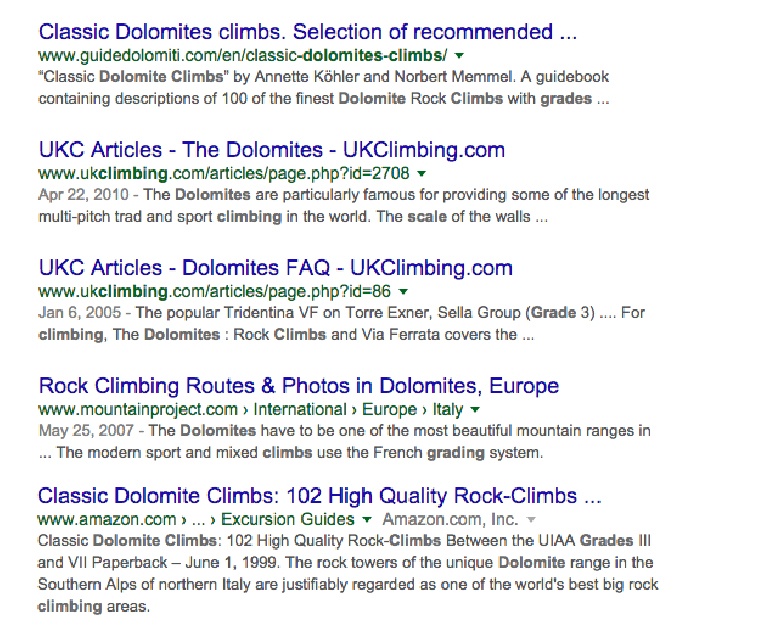

QUERY: Dolomites climbing grades

| Before | After |
|---|---|
| | |

I searched this query because I wanted to find out which of the many systems used to grade the difficulty of climbs was employed in the Dolomites in Italy. That way I could translate back to the grading system I was accustomed to (the Yosemite Decimal System used throughout the US), and determine which Dolomite climbs I might be able to do.

Previously, “grades” was de-emphasized and most of the results focused on “Dolomites climbing”. Before BERT, over half the results were identical for “Dolomites climbing grades” and “Dolomites climbing”, a classic example of how broad coverage of a topic was often given short shrift by the old algorithm.
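If you want to run the same check on your own queries, the overlap between two result sets is easy to compute. The sketch below assumes you have already collected the ranking URLs for each query by hand or with a rank tracker; the `result_overlap` helper and the example.com URLs are hypothetical placeholders, not the actual results discussed here.

```python
def result_overlap(results_a, results_b):
    """Fraction of distinct URLs in results_a that also appear in results_b."""
    unique_a = set(results_a)
    if not unique_a:
        return 0.0
    return len(unique_a & set(results_b)) / len(unique_a)


# Hypothetical result lists for a long-tail query and its shorter parent query.
long_tail_results = [
    "https://example.com/dolomites-climbing",
    "https://example.com/via-ferrata",
    "https://example.com/mountain-guides",
    "https://example.com/grade-conversion",
]
parent_results = [
    "https://example.com/dolomites-climbing",
    "https://example.com/via-ferrata",
    "https://example.com/mountain-guides",
    "https://example.com/hotels",
]

overlap = result_overlap(long_tail_results, parent_results)
print(f"{overlap:.0%} of long-tail results also rank for the parent query")
```

A high overlap suggests the extra words in the longer query are being largely ignored; a drop in overlap after an update suggests they are now being taken into account.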

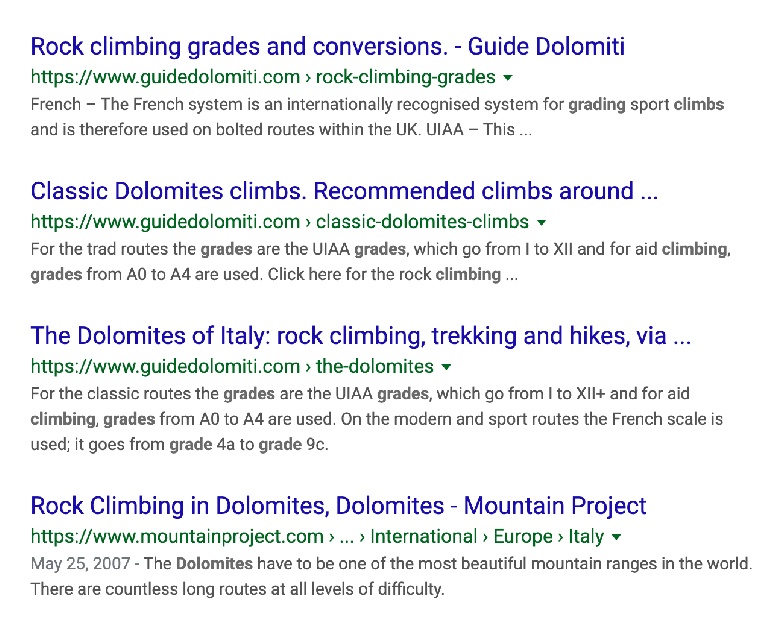

Now, Google gives greater weight to “grades”, and understands that “grades” coming after “climbing” completely changes the intent of the query. The featured snippet does a good job of explaining that there are multiple grading systems used in the Dolomites. The #1 result is a page focused entirely on translating one grade to another, but unlike the featured snippet, it doesn’t explain which systems are used in the Dolomites, and where. (Instead of being a page about Dolomites climbing grades, it’s a page about climbing grades in general, on a website about the Dolomites.)

Interestingly, several new results don’t explain the official grading system at all. Instead, they talk in abstract terms about how “hard” or “easy” given climbs might be for their guided clients.

By paying more attention to the full query, Google has improved query diversity. Rather than just duplicating the results for “Dolomites climbing”, they’ve created opportunities for smaller sites to compete here if they write more specifically about Dolomites grading systems.

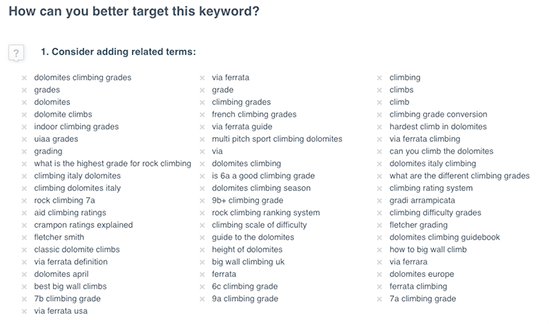

Related Terms for this Query?

Related Term suggestions from CanIRank’s on-page optimization tool fully understood the new BERT intent and include:

- climbing grade conversion

- climbing scale of difficulty

- climbing difficulty grades

- what are the different climbing grades

- aid climbing ratings

- UIAA grades

- rock climbing ranking system

- french climbing grades

- climbing rating system

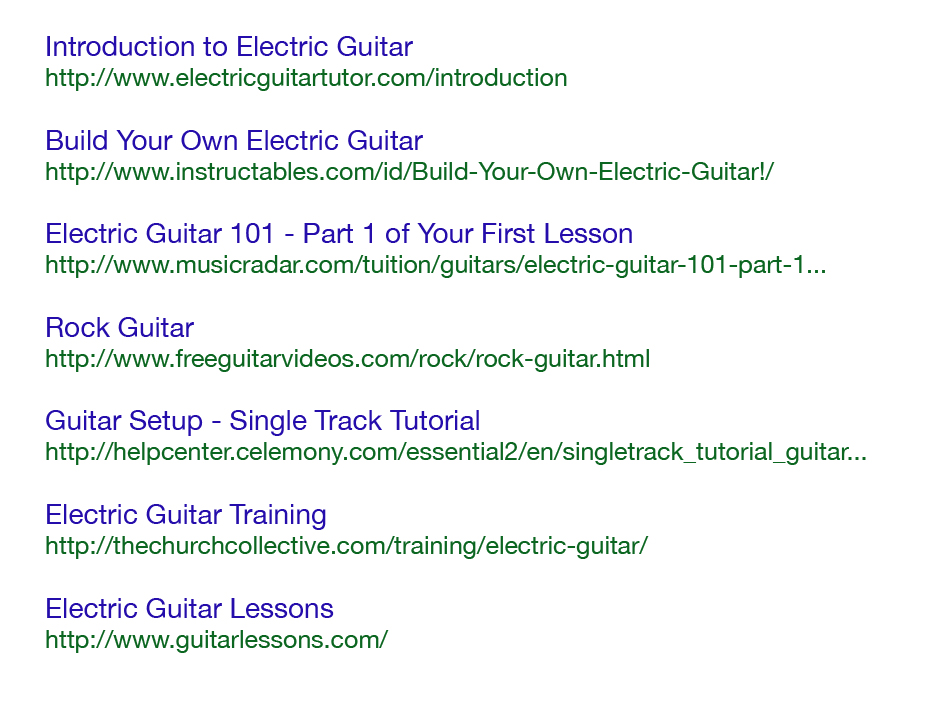

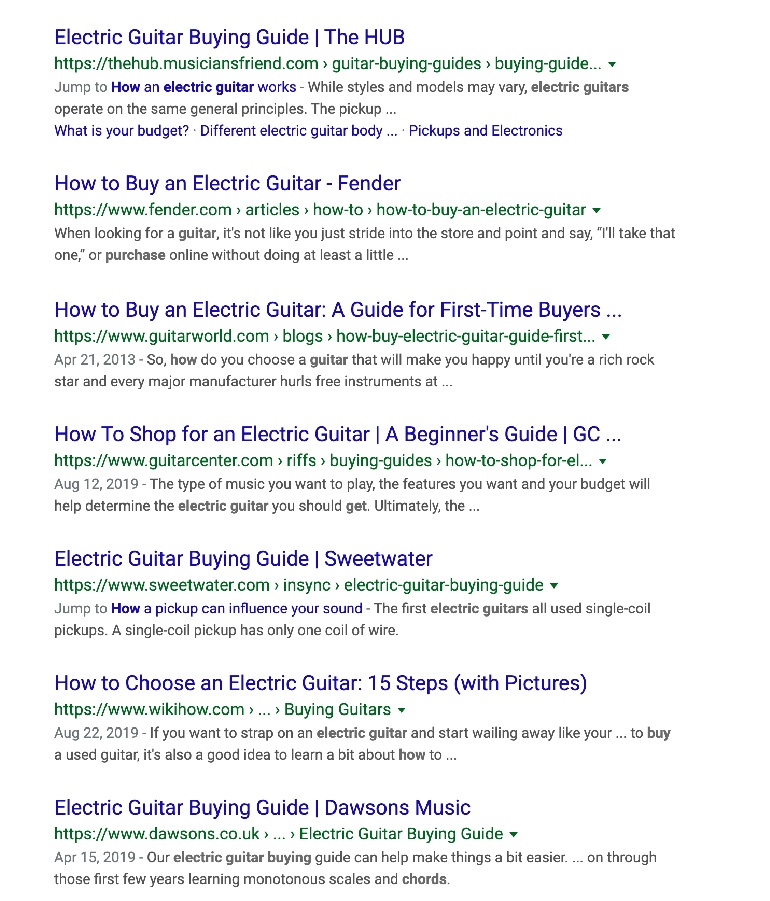

QUERY: Tutorial to buy an electric guitar

| Before | After |
|---|---|
| | |

Prior to Google BERT, all results for this query were electric guitar tutorials, even though the query intent was actually learning how to buy an electric guitar, and not how to play one. Google picked up on “tutorial” and “electric guitar”, but failed to understand that the admittedly awkward formulation “tutorial to buy” meant that buying was a key part of the query intent.

Now almost all top results are focused on the right question! This one is definitely a win for BERT, and a win for eCommerce stores with an electric guitar buying guide who previously would have found themselves competing against sites offering lessons.

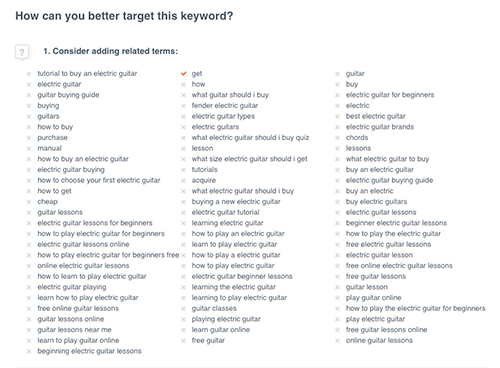

Related Terms for this Query?

Prior to Google BERT, CanIRank’s Related Terms focused on the intent targeted by Google, and included:

- guitar lessons

- electric guitar tutorial

- how to play electric guitar

- electric guitar chords

Now that Google correctly understands this query, simply refreshing the CanIRank on-page optimization report has generated a totally new set of Related Terms better aligned with the new intent:

- electric guitar buying guide

- what guitar should I buy

- how to buy an electric guitar

- how to choose your first electric guitar

- what size electric guitar should I get

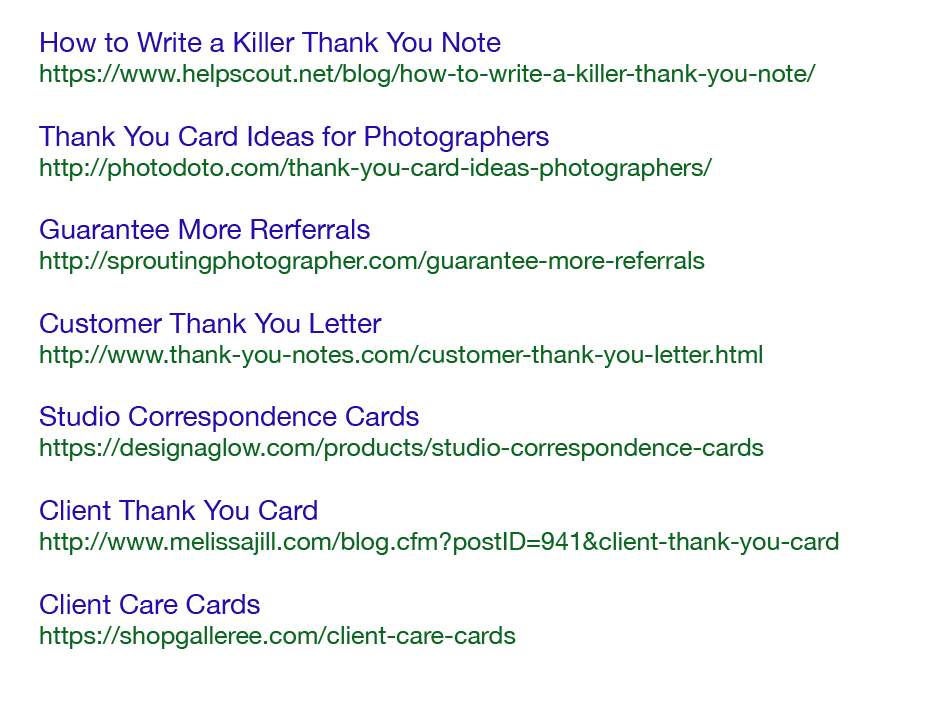

QUERY: Thank you card from photographer to client

| Before | After |
|---|---|
| | |

While the old results did include some relevant pages, one of the top-ranking pages was https://www.helpscout.com/blog/how-to-write-a-killer-thank-you-note/. This is a classic example of how less nuanced meaning-based relevancy algorithms benefitted sites with high domain authority, even when they weren’t actually addressing the question. HelpScout has the highest Website Strength of any site on page 1, but they certainly shouldn’t be ranking #1. Unlike other results, they don’t address the specific situation of a photographer writing a thank you note to their client.

After BERT, the HelpScout post has been pushed down to page 2, and all page 1 results are specific to photography. That’s another win for BERT and a huge relief to those of us writing in-depth content on sites that don’t always have the highest domain authority.

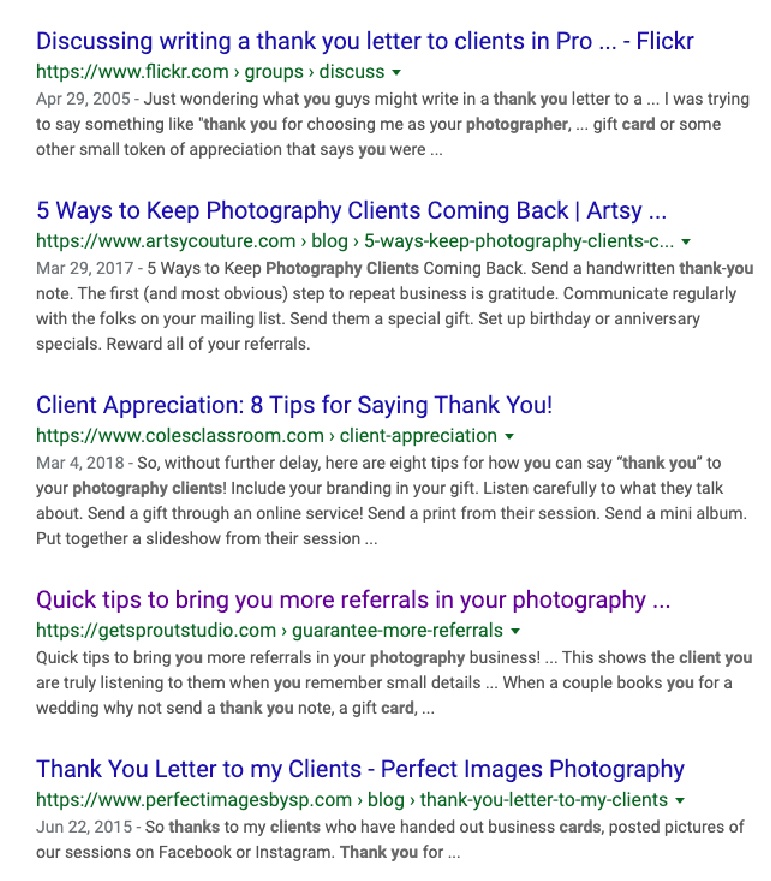

Related Terms for this Query?

As Google’s understanding of query intent shifts, we need to shift our usage of Related Terms to ensure that we’re addressing the intent as Google understands it, not just as we understand it.

CanIRank’s old Related Term recommendations did include some photography-specific terms, but were more focused on general business thank you notes:

- Thank you card

- photographer thank you card template

- business thank you notes

- client thank you

Now that Google understands the query better, our Related Term suggestions are also more in line with the correct intent:

- customer thank you note examples

- client thank you cards

- photographer thank you card

- photographer thank you to client template

Can you optimize for BERT?

If I had a dollar for every time I read in an article on the BERT launch something like “you can’t optimize for BERT, because it’s machine learning” (or similar), I could take our entire team out to lunch, guacamole included.

On the one hand, I understand this perspective: because BERT is mainly about improving Google’s query understanding, it doesn’t change how Google determines whether or not a page is relevant. The fundamentals of on-page SEO remain unchanged.

However, that certainly doesn’t mean this news isn’t actionable.

BERT noticeably improves Google’s ability to understand natural language, but they’re still far from perfect. If you feel your job as an SEO is to ignore search engine capabilities and “just write for humans”, this won’t change what you do. But you will probably be outranked by SEOs who are able to write for humans while also keeping in mind the limitations and capabilities of search engines.

A good example of how writing for humans and search engines can diverge: this blog post. Including 4 specific examples of queries where BERT improved Google results certainly makes the post more useful for our human readers. But unfortunately search engines (even post-BERT) aren’t able to understand how 500 words on the Dolomites, small business blogging, and guitar tutorials improve the quality or usefulness of an article about BERT. Including those examples dilutes our optimization and makes this post much less likely to rank well.

We optimize to help make search engines’ job easier, and should bear in mind the current imperfect reality of relevancy algorithms.

With that mindset, BERT subtly shifts the ideal content strategy, and it should also change how you do on-page optimization. So even if BERT doesn’t change on-page optimization fundamentals, you can “optimize” for it in the sense that you can adjust your SEO strategy and optimization process. While these shifts are subtle, I believe SEO consultants and content creators who understand this before others have an opportunity to gain some additional traffic for their clients.

How to Adapt Your Content Strategy

By better understanding variations between queries, BERT should reward a content approach that thoroughly covers a topic, and attempts to address lots of different query intents.

A good approach would be to start with a Customer Research Journey analysis so you have a full understanding of the questions your target customers are asking long before they begin querying actual products and services. Focus on knowledge rather than keywords.

FAQs and encyclopedic reference material are two content types that naturally tend to address a lot of different intents and should do better after this update. After all, BERT learned much of its understanding of language from Wikipedia, which made up most of its pre-training text.

It’s possible the shift towards BERT will slow or reverse the trend towards long form content that we’ve seen in the past few years. As with the example queries above, some long, highly-linked pieces of content are now being replaced by shorter, lower authority content that more directly addresses the query intent.

Higher authority sites that used to rank for queries where Google was ignoring a lot of the nuance may find themselves losing long tail traffic, like NYTimes.com:

Feels huge to us at NYT. But it coincided with the move to mobile-1st indexing — so we’re not sure what’s causing changes

— Hannah Poferl (@HannahPoferl) October 30, 2019

We’ve long advised CanIRank Full Service clients to pursue a strategy of establishing deep niche expertise with Supporting Content that covers many different aspects of your primary topic. This approach should be even more powerful now that more specific content has more opportunities to get noticed.

How to Change Your On-Page Optimization Process

Although Google BERT doesn’t change how Google determines the relevancy of a page, it should change how you do on-page optimization.

Huh? How is that?

The most effective on-page optimization processes in 2019 have focused on understanding and satisfying query intent. It’s no longer about a keyword. It’s about what a searcher is trying to accomplish.

And prior to BERT, if Google misunderstood the intent of a query, we would need to optimize for that incorrect intent. In other words, in order to optimize for “tutorial to buy an electric guitar”, we would need to use content about electric guitar lessons. Often queries contain multiple intents, and on-page SEO becomes a question of trying to be the absolute best for one intent or offering a little help for each of them.

As BERT subtly shifts Google’s understanding of 10% of queries, you’ll need to make sure that you’re adapting your optimization process. A good way to get a feel for how Google interprets a query is to read over the top results and look over the “People also ask” and Related Searches sections.
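When reading through the top results by hand, it can help to tally which phrases keep recurring. The Python sketch below is one rough way to do that for a list of result titles you have copied out yourself; the `common_phrases` helper and the sample titles are hypothetical, and this is only an illustration, not a description of how any particular SEO tool works.

```python
from collections import Counter
import re


def common_phrases(titles, min_results=2):
    """Return two-word phrases that appear in at least min_results titles."""
    counts = Counter()
    for title in titles:
        words = re.findall(r"[a-z0-9']+", title.lower())
        # Use a set so each title counts a given phrase only once.
        bigrams = {" ".join(pair) for pair in zip(words, words[1:])}
        counts.update(bigrams)
    return [(phrase, n) for phrase, n in counts.most_common() if n >= min_results]


# Hypothetical titles standing in for the current top results of a query.
titles = [
    "Electric Guitar Buying Guide for Beginners",
    "How to Buy an Electric Guitar",
    "How to Choose Your First Electric Guitar",
]

phrases = common_phrases(titles)
for phrase, n in phrases:
    print(f"{phrase}: appears in {n} of {len(titles)} titles")
```

Phrases shared by most of the top results are a reasonable hint about the intent Google is currently rewarding for that query.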

If you’re looking for a shortcut that is faster and easier than manually reading through all the top results to identify common themes, you should know this is exactly what CanIRank’s on-page optimization tool does. In addition to providing themes, entities, and phrases commonly used by top-ranking results, it also pulls in “People also ask” questions and Related Searches, so you can see at a glance which questions your content should answer and which topics you should cover.

Since CanIRank’s recommendations are based on current top rankings, they automatically shift whenever Google introduces an algorithm change like BERT. We provide targeted, actionable Opportunities that reflect both your business and how the algorithm evolves.

Query intent has always been essential to SEOs. This update specifically changes how Google interprets intent. Good optimization will address all of the additional nuances in intent that Google is now capable of deciphering.
